How to make solution templates with NuGet packages

Visual Studio’s template capabilities are quite limited. Yes, you can save some work, but you can basically only create projects and files, and with heavy limitations. What if we put some executable code into the equation?

The Problem

Within our organization (NuGetting Soft), there is a typical VS Solution that developers need to create quite often. It has 3 projects:

- Interface with all public stuff, interfaces, service parameter and return types.

- Domain with the actual logic and all private implementation stuff.

- Tests with all unit tests.

More than just saving people time, we want all the solutions to be arranged in a particular manner, with assemblies and namespaces named according to the specific domain being implemented. If some team wants to build something for Human Resources we want: NuGettingSoft.HumanResources.Interface for the interface, NuGettingSoft.HumanResources for the domain and HumanResources.Tests for the unit tests.

NuGetting

NuGet packages are an easy and clean way to deploy libraries. I recently discovered you can put some code in there as well: PowerShell scripts, which are quite powerful and not hard at all to get started with.

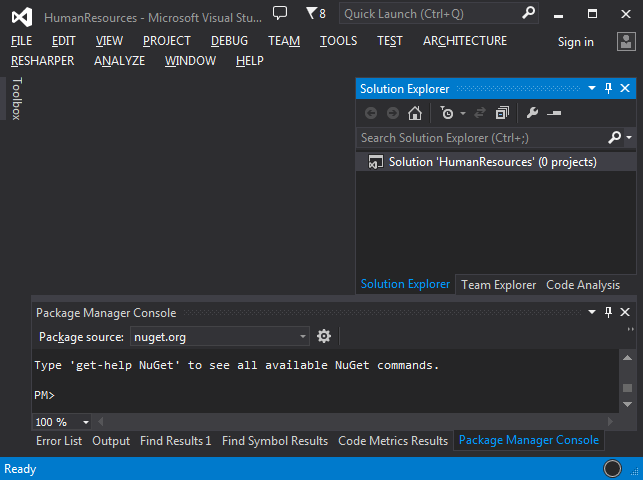

You’d need to install NuGet and make sure it is accessible from the command line. Then go ahead and create a VS Solution with a Project and open the Package Manager Console (PM). We see something like this:

Create a Tools\Init.ps1 file:

Param($installPath, $toolsPath, $package, $project)

Write-Host $installPath

Write-Host $toolsPath

Not very useful, but I promise it will grow. This PowerShell file is executed the first time the package is installed and every time the solution is loaded afterwards. PowerShell is the language we use to program custom operations in NuGet packages.

Now we create a nuspec file:

<package>

<metadata>

<id>NuGetting</id>

<version>1.0.0.0</version>

<authors>NuGetteer</authors>

<owners>NuGetteer</owners>

<requireLicenseAcceptance>false</requireLicenseAcceptance>

<description>Fiddle with Nuget scripts</description>

</metadata>

<files>

<file src="Tools\**\*.*" target="Tools"/>

</files>

</package>

Once packed, we will have a NuGet package that would only execute our Init.ps1 file. Let’s pack it and install it in our own solution. Go to the PM and execute:

NuGet pack NuGetting\NuGetting.nuspec

We get something like this:

Attempting to build package from 'NuGetting.nuspec'.

Successfully created package 'C:\Path\NuGetting.1.0.0.0.nupkg'.

We now install our newly created package:

Install-Package NuGetting -Source 'C:\Path\'

Voilà. We get the following output:

Installing 'NuGetting 1.0.0.0'.

Successfully installed 'NuGetting 1.0.0.0'.

C:\Path\NuGetting.1.0.0.0

C:\Path\NuGetting.1.0.0.0\tools

As you can see, we get the 2 lines we wrote with the Write-Host commands. We need to uninstall the package so we can continue fiddling with it until we are tired:

Uninstall-Package NuGetting

Crafting a template

Let’s create a solution that would look exactly like the one we need:

We put everything in there: all the projects and references between projects. Then we close the solution and start with the fun part.

First, clean up: delete all compiled binaries (obj and bin) and anything else your IDE might have left around. All of this is the usual garbage.

Then we must edit all the files that contain information we want to be modified when applying the template.

Start with the solution file

Just at the top of the file you’d find the lines that matter:

Project("{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}") =

"Interface", "Interface\Interface.csproj", "%Interface.ProjectId%"

EndProject

Project("{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}") =

"Domain", "Domain\Domain.csproj", "%Domain.ProjectId%"

EndProject

Project("{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}") =

"Tests", "Tests\Tests.csproj", "%Tests.ProjectId%"

EndProject

And the GlobalSection too:

%Interface.ProjectId%.Debug|Any CPU.ActiveCfg = Debug|Any CPU

We’ve replaced the project Ids with tokens (e.g. %Interface.ProjectId%). Project names and paths could be replaced too, but for simplicity we won’t. I recommend not touching the Ids on the left: they are project type GUIDs (this one identifies a C# project), not project Ids.

Projects

Here we need to change the project Id (use guid’s curly braces format for these ones, format specifier: "b"), root namespace and assembly name:

<PropertyGroup>

<ProjectGuid>%Interface.ProjectId%</ProjectGuid>

<RootNamespace>NuGettingSoft.%Domain.Name%</RootNamespace>

<AssemblyName>NuGettingSoft.%Domain.Name%.Interface</AssemblyName>

</PropertyGroup>

And the references:

<ProjectReference Include="..\Interface\Interface.csproj">

<Project>%Interface.ProjectId%</Project>

<Name>Interface</Name>

</ProjectReference>

We do the same for all other projects.

Code Files

We do the same here. In our example we just have the AssemblyInfo.cs files. Here we need to set the assembly Id (use guid’s digits format for these ones, format specifier: "d"):

[assembly: AssemblyTitle("NuGettingSoft.%Domain.Name%.Interface")]

[assembly: AssemblyProduct("NuGettingSoft.%Domain.Name%.Interface")]

[assembly: Guid("%Interface.AssemblyId%")]

The template is ready! Now we need to work on how to apply it.

The Core

Create a NuGet package with an Init.ps1 file to apply the template. Let’s walk thru the script file contents.

Define the token values

$tokens = @{

"Domain.Name" = $domainName

"Domain.ProjectId" = Create-ProjectId

"Interface.ProjectId" = Create-ProjectId

"Tests.ProjectId" = Create-ProjectId

"Domain.AssemblyId" = Create-AssemblyId

"Interface.AssemblyId" = Create-AssemblyId

"Tests.AssemblyId" = Create-AssemblyId

}

Create-ProjectId and Create-AssemblyId just generate a GUID and format it as described before, with either "b" or "d".
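The bodies of these two helpers are not shown here, so the following is only a minimal sketch of what they might look like. It relies on the standard Guid format specifiers; upper-casing the project Id is an assumption, based on how Visual Studio usually writes project GUIDs.

```powershell
# Hypothetical sketch: the article does not show the real helper bodies.

# Project Ids in .sln and .csproj files are wrapped in curly braces ("B" format).
# Upper-casing is an assumption that mimics what Visual Studio usually writes.
function Create-ProjectId {
    [guid]::NewGuid().ToString("B").ToUpper()
}

# The Guid attribute in AssemblyInfo.cs takes the plain hyphenated form ("D" format).
function Create-AssemblyId {
    [guid]::NewGuid().ToString("D")
}
```

Every call returns a fresh GUID, so each project gets its own Id as long as each token is assigned once in $tokens.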

Apply all templates

We apply them to all the files in the template folder. For that we need to know where the script is executing from. Remember the $toolsPath argument? Let’s grab it and use it:

Apply-All-Templates "$toolsPath\.." .

Here we’ve just asked to apply all templates and drop the results in the solution folder.

Function Apply-All-Templates($sourcePath, $destinationPath) {

New-Item $sourcePath\Output -type Directory

Get-ChildItem $sourcePath\Template -Recurse `

| Where { -not $_.PSIsContainer } `

| ForEach-Object {

$source = $_.FullName

$destination = $source -replace "\\Template\\", "\Output\"

Apply-Template $source $destination $tokens

}

Robocopy $sourcePath\Output $destinationPath * /S

Remove-Item $sourcePath\Output -Recurse

}

I seriously hope the previous code doesn’t need much explanation. The idea is to iterate over all the files and call Apply-Template on each of them, dropping the result in a temporary folder. Then we copy that folder’s content into $destinationPath and cover our tracks.

File by file

Function Apply-Template($source, $destination, $tokens) {

New-Item $destination -Force -Type File

(Get-Content $source) `

| Replace-Tokens $tokens `

| Out-File -Encoding ASCII $destination

}

Simple: it goes line by line and replaces all the tokens it can find. The output is ASCII encoded because I’ve had problems in the past with the default, which in Windows PowerShell is UTF-16 ("Unicode") rather than UTF-8.

Replace tokens

Function Replace-Tokens($tokens) {

Process {

$result = $_

$match = [regex]::Match($_, "%((?:\w|\.)*)%")

While ($match.Success) {

Foreach($capture in $match.Captures) {

$token = $capture.Groups[1].Value

If ($tokens.ContainsKey($token)) {

$replacement = $tokens.Get_Item($token)

$result = $result -replace "%$token%", $replacement

}

}

$match = $match.NextMatch()

}

$result

}

}

This one works over the pipeline. For each input line it finds every %token% with a regex, looks the name up in $tokens, and replaces it with its value if found.
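As a point of comparison, here is a hedged single-pass variant that hands the lookup to a .NET MatchEvaluator. The name Replace-TokensOnce is made up for this sketch and it is not the function used in the package. Unlike the -replace operator, it inserts values literally, so a "." in a token name or a "$" in a value gets no special regex treatment.

```powershell
# Hypothetical alternative to Replace-Tokens: one regex pass per input line.
function Replace-TokensOnce {
    param([hashtable]$Tokens)
    process {
        # Called once per %token% match; returns the text that replaces it.
        $evaluator = [System.Text.RegularExpressions.MatchEvaluator] {
            param($match)
            $name = $match.Groups[1].Value
            if ($Tokens.ContainsKey($name)) {
                [string]$Tokens[$name]   # known token: substitute its value
            } else {
                $match.Value             # unknown token: leave it untouched
            }
        }
        [regex]::Replace([string]$_, '%([\w.]*)%', $evaluator)
    }
}

# Usage: pipe lines through it, just like Replace-Tokens.
$sample = @{ "Domain.Name" = "HumanResources" }
"<RootNamespace>NuGettingSoft.%Domain.Name%</RootNamespace>" | Replace-TokensOnce $sample
```

Unknown tokens pass through unchanged, which matches the behaviour of the loop above.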

Add projects to solution

After all templates have been applied we just need to add each of the projects to the solution. Since there are dependencies we need to specify the order. Now that we have only 3 projects, it can be done by hand, but perhaps an automatic process can be used if that number grows.

@("Interface", "Domain", "Tests") `

| ForEach-Object { Add-Project-To-Solution $_ }

And of course:

Function Add-Project-To-Solution($project) {

$projectPath = Resolve-Path "$project\$project.csproj"

$dte.Solution.AddFromFile($projectPath, $false)

}

Here we use $dte, an object available from the PM. All interactions with VS can be done through it, even activating menu items. Read about the dte here.

Last but not least

Uninstall-Package $package.Id

Weird, right? The thing is, Init.ps1 will be executed every time the solution is opened. We don’t want the templates applied every day, potentially erasing all the work done. Besides, this package provides nothing once installed. Other techniques to detect that templates have been applied could be used instead, and could even allow more templating with package updates. But for now, let’s keep it simple.
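One such technique, sketched here under assumptions, is a marker file dropped in the solution folder once the templates have been applied. The file name and both function names are invented for this example and are not part of NuGet.

```powershell
# Hypothetical guard: the marker file name is an arbitrary choice for this sketch.
$markerName = ".template-applied"

# Returns $true when the marker file already exists in the solution folder.
function Test-TemplateApplied($solutionPath) {
    Test-Path (Join-Path $solutionPath $markerName)
}

# Creates the marker file so later runs of Init.ps1 can skip the templates.
function Set-TemplateApplied($solutionPath) {
    New-Item (Join-Path $solutionPath $markerName) -ItemType File -Force | Out-Null
}
```

Init.ps1 could then apply the templates only when Test-TemplateApplied returns false and call Set-TemplateApplied afterwards, instead of uninstalling the package.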

Here we also used another object, $package. This one is a NuGet.OptimizedZipPackage; you can read more about it here. It has zillions of properties and methods. You’ll need to navigate the hierarchy or reflect over the compiled NuGet assemblies to get the whole list. I’ve found these to be very useful:

class OptimizedZipPackage {

string Id { get; }

string Title { get; }

IEnumerable<string> Authors { get; }

IEnumerable<string> Owners { get; }

string Summary { get; }

string Description { get; }

}

You can also get your hands on $project if the package is installed into a project. You would find info about it here.

Wrap it up

That was most of the heavy artillery. There are some more helper functions, but they are not worth showing here. I’ve extracted all these functions into a library file, Template-Tools.ps1, as I’m likely to need them again. That will trigger a warning when running NuGet pack, saying that it’s an unrecognized PowerShell file; just pay no attention to it.

Before we NuGet pack it, all we need is a nuspec file:

<package>

<metadata>

<id>NuGettingSoft.SolutionTemplate</id>

<version>1.0.0.0</version>

<authors>NuGetteer</authors>

<owners>NuGetteer</owners>

<requireLicenseAcceptance>false</requireLicenseAcceptance>

<description>NuGettingSoft Solution Template</description>

</metadata>

<files>

<file src="Template\**\*.*" target="Template"/>

<file src="Tools\**\*.*" target="Tools"/>

</files>

</package>

Now we run:

NuGet pack NuGettingSoft.SolutionTemplate.nuspec

Move the resulting NuGettingSoft.SolutionTemplate.1.0.0.0.nupkg file into our organization’s private and secret NuGet repository. Our job here is done.

The Solution

Our new Omega team has been appointed to build the Domain for our Human Resources department. Sam, one of their developers, goes and creates an empty solution named HumanResources:

Then he runs on the PM:

Install-Package NuGettingSoft.SolutionTemplate

When the process ends, he gets:

Love Automation…Don’t you?

Conclusions

NuGet packages give an organization a powerful scaffolding tool which could greatly improve uniformity and productivity. A little bit of imagination on top of it could take you anywhere. Tomorrow you might be generating very large shells with no sweat.

As usual, with power comes responsibility. You will be deploying executable code. Keep in mind that the developer installing your package might be running VS as Administrator.

QA 4 Code
